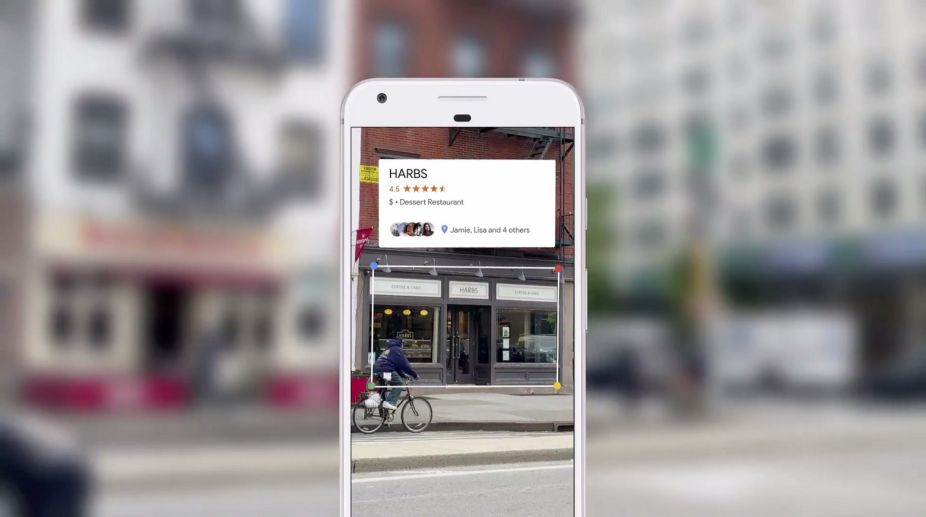

Google has reportedly started rolling out its visual search feature, Google Lens, in Assistant to a first batch of Pixel and Pixel 2 smartphone users. Lens was first unveiled at Google I/O, and at its October 4 hardware event Google said the feature would come to Pixel phones. It is designed to bring up relevant information by visually analysing what the camera sees.

“The first users have spotted the visual search feature up and running on their Pixel and Pixel 2 phones,” 9to5Google reported late on Friday.

The visual search feature first debuted in Google Photos on Pixel 2 devices before expanding to the original Pixel and Pixel XL late last month. During the October 4 keynote, Google announced that Google Lens would arrive as a preview on Pixel devices later this year.

In the Photos app, Google Lens can recognise items such as addresses and books. There, the feature can be activated when viewing any image or screenshot.

In Google Assistant, by contrast, Lens is built directly into the sheet that pops up when the home button is held down.

“Lens was always intended for both Pixel 1 and 2 phones,” Google had earlier said in a statement.

(Written with inputs from IANS)