Sensory encoding is the accurate and faithful depiction of the outside world using different sensors, whereas perception is how the brain’s computational machinery interprets those signals.

Thus, there is a fundamental difference between sensory encoding and perception. Machines are far superior to the human eye when it comes to measuring reflected wavelengths of light within each scanned pixel. However, the visual areas of the brain can “interpret” these wavelengths as specific colours, hues and shades.

One major difference between machine sensing algorithms and the human nervous system is that the latter is not a one-way information channel. Based on experience, our brains construct expectations about the outside world. When sensory information enters the nervous system, it is compared with existing expectations and perceived accordingly. Thus, unexpected events, which can be potentially dangerous, are amplified whereas expected sensory input is reduced in amplitude.
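The comparison described above can be illustrated with a toy calculation. This is only a sketch under stated assumptions, not a model taken from the study: the function name `perceived_signal` and the two gain parameters are hypothetical, and the input is simply split into the part the expectation accounts for and the part it does not.

```python
def perceived_signal(sensory_input, expected_input,
                     surprise_gain=2.0, expected_gain=0.5):
    """Toy model (hypothetical): weight expected and unexpected input differently.

    All values are in arbitrary units. The gains are illustrative
    assumptions, not measured quantities.
    """
    # Part of the input that the expectation already accounts for.
    expected_part = min(sensory_input, expected_input)
    # Part of the input that exceeds the expectation (the "surprise").
    unexpected_part = max(sensory_input - expected_input, 0.0)
    # Expected input is reduced in amplitude, unexpected input is amplified.
    return expected_gain * expected_part + surprise_gain * unexpected_part


if __name__ == "__main__":
    # Same physical input, fully expected versus fully unexpected.
    print(perceived_signal(1.0, expected_input=1.0))
    print(perceived_signal(1.0, expected_input=0.0))
```

With these assumed gains, the same input produces a smaller perceived signal when it is fully expected than when it comes as a complete surprise.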

There are several important clinical consequences of this phenomenon, spanning the different sensory modalities. First, patients who cannot construct accurate expectations often experience hallucinations. For example, people with schizophrenia report hearing voices because they cannot predict the sound of their own voices when they speak.

Second, in patients who have nerve lesions and therefore cannot sense accurately, expectations are not met, and the faulty sensory information is amplified, resulting in chronic pain. For example, lesions in nerves that convey touch signals often lead to painful sensations evoked by normally non-painful touch, a chronic condition known as neuropathic pain.

A recent study published in the journal Nature Communications by our group at the Hertie Institute of Clinical Brain Research at the University of Tübingen shows how these expectations are matched to actual sensory experiences within the neural circuits of the rat brain and identifies one specific location where such computations are performed.

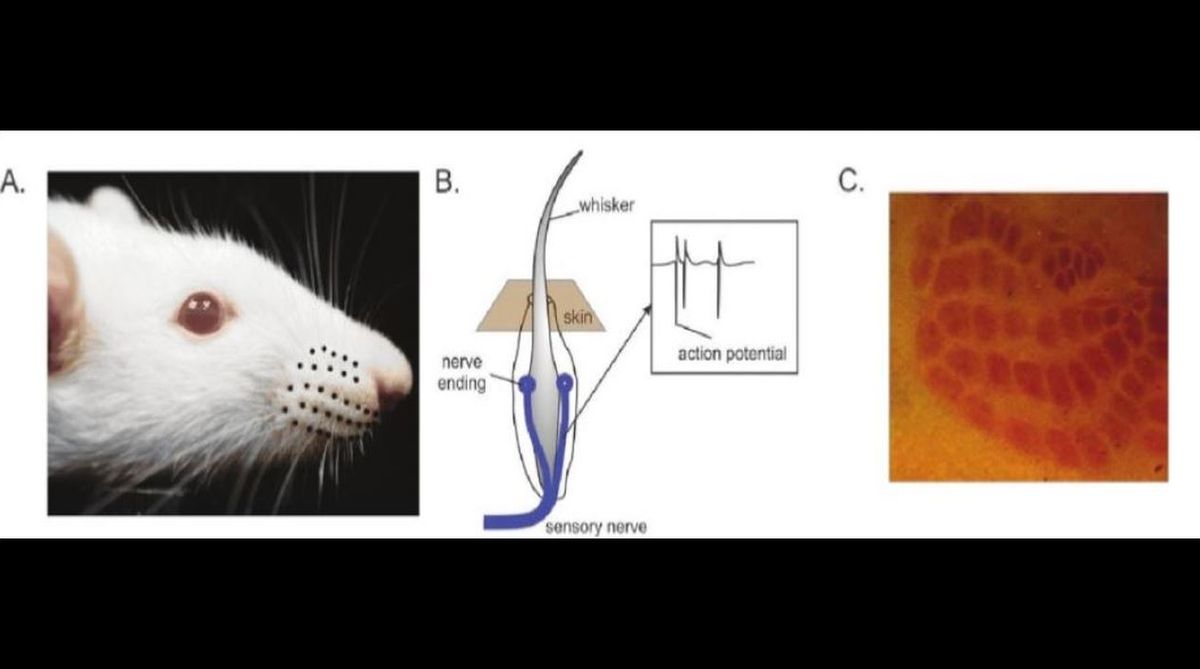

Rodents have an array of whiskers on their snouts with which they feel or sense objects around them (Figure A). Rats and mice can tell the difference between two sandpapers of different roughness by stroking the surface with their whiskers. Each whisker is a conical hair embedded inside the skin within a bundle of nerve endings (Figure B).

Whenever a whisker contacts an object and it is bent, these nerve endings around the shaft are activated mechanically and lead to electrical impulses in the nerve. These electrical impulses, called action potentials (Figure B, inset), are then conveyed to the higher areas of the rat brain via three different relays at different hierarchical stages of the nervous system — one in the brainstem, one higher up in the thalamus and finally one in the cerebral cortex.

Each relay, or “synapse”, is a junction of two nerves: an action potential arriving at the end of one nerve excites the terminals of the other via electrochemical means. One aspect of the rodent nervous system that makes it a great animal model to study is its topography. Clusters of nerve cells, each responding to deflections of one particular whisker, are distinct from one another, allowing us to accurately target them with recording electrodes. These topographically arranged neuronal clusters form a map of the whiskers, which can be seen imprinted on the brain’s surface when it is stained with specific markers (Figure C).

Sensory responses in the whisker-responsive neurons of the rodent cortex are affected by self-movement. The same neuron responds to a whisker deflection of the same intensity with fewer action potentials when the animal moves its whiskers. This is because the brain computes expectations based on its own actions. When the animal moves its whiskers to feel a novel object, the whisker nerve endings are activated merely because of the animal’s own movement.

However, this activity is expected by the nervous system, which seeks to suppress it so that the more interesting, unexpected activity, such as that due to contact with the novel object, can be amplified and perceived. In doing so, the nervous system effectively “gain modulates” itself by dynamically adjusting its own sensitivity depending on context. If this were indeed the case, such gain modulation would most probably be under the control of the cerebral cortex, where such predictions are known to be computed. This idea had not been tested until now.
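Gain modulation of this kind can be pictured as a context-dependent multiplier on the response to a stimulus. The sketch below is a deliberately simplified illustration, not the circuit model from the study: the function `evoked_spikes`, the gain values and the `spikes_per_unit` scaling are all hypothetical choices.

```python
def evoked_spikes(deflection_intensity, whisking,
                  rest_gain=1.0, whisking_gain=0.6, spikes_per_unit=10.0):
    """Toy gain-modulated response (hypothetical parameters).

    The same deflection evokes fewer spikes when the animal is moving
    its whiskers, because the gain is turned down in that context.
    """
    # Pick the sensitivity according to context (self-movement or rest).
    gain = whisking_gain if whisking else rest_gain
    # The response scales with stimulus intensity, multiplied by the gain.
    return gain * spikes_per_unit * deflection_intensity


if __name__ == "__main__":
    # Identical stimulus, two contexts.
    print("at rest: ", evoked_spikes(1.0, whisking=False))
    print("whisking:", evoked_spikes(1.0, whisking=True))
```

The stimulus itself is unchanged between the two calls; only the sensitivity differs, which is what distinguishes gain modulation from a change in the input.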

We trained rats to touch objects by moving their whiskers while we recorded the activity of single neurons using an array of fine electrodes connected to large amplifiers. Object contacts made while the whiskers were in motion indeed evoked fewer action potentials than identical contacts made while the whiskers were at rest. We found this effect very early in the rodent nervous system: in the brainstem, at the first relay or synapse.
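One common way to put a number on such a difference is a normalized contrast between the two conditions. The sketch below assumes that approach for illustration only; it is not necessarily the measure used in the study, the function name `modulation_index` is hypothetical, and the spike counts in the usage example are made up.

```python
from statistics import mean


def modulation_index(rest_counts, motion_counts):
    """Normalized contrast between spike counts at rest and in motion.

    Returns a value between -1 and 1: positive when contacts at rest
    evoke more spikes, zero when the two conditions are identical.
    """
    rest_mean = mean(rest_counts)
    motion_mean = mean(motion_counts)
    total = rest_mean + motion_mean
    if total == 0:
        # No spikes in either condition: report no modulation.
        return 0.0
    return (rest_mean - motion_mean) / total


if __name__ == "__main__":
    # Made-up spike counts per contact, for illustration only.
    rest = [4, 6, 5, 5]
    motion = [2, 3, 3, 2]
    print(modulation_index(rest, motion))
```

An index of zero would mean that contacts during whisker motion and at rest evoke the same number of action potentials.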

But where did this gain modulation originate? Using fluorescent dyes injected into this area, we showed that these neurons received direct connections from the cerebral cortex. Could these connections be the ones mediating the gain modulation? To test this, we made large lesions in the whisker-responsive area of the rodent cerebral cortex: the primary somatosensory cortex, an area analogous to the hand representation in humans. This area is connected with movement-generating regions of the brain (motor cortex) and is a possible candidate for generating expectations. Animals with lesions now showed no gain modulation at all: responses during whisker motion and rest were identical!

Our study has important implications for how we think about sensory processing. Traditionally, sensory processing has been seen as a passive process in which external stimuli are simply encoded by nerve impulses. We show that higher brain areas like the cortex actually change how the external world is perceived, actively altering the representation of the world through direct modulation of neural activity at early synapses.

In the clinical conditions mentioned earlier, the same brain area that we recorded from has been shown to be involved. People with schizophrenia are known to have abnormal wiring patterns in wide regions of the cerebral cortex, whereas in chronic pain, severing the connections of the primary somatosensory cortex to the brainstem, as we did, can suddenly abolish the painful sensations evoked by normal touch.

While machine sensing opens up new possibilities, it is still very far away from neural processing. Intelligent machines can only be designed when we know more about the computations occurring in the nervous system and implement those processing strategies in electronic devices. Our study is a small step in that direction.

The writer is project leader, Hertie Institute of Clinical Brain Research, Tübingen, Germany.