I am a mathematician and computer scientist whose research includes work related to artificial intelligence, so as I watched a 50th-anniversary screening of 2001: A Space Odyssey, I found myself comparing the story’s vision of the future with the world today.

The movie was made through a collaboration between science fiction writer Arthur C Clarke and film director Stanley Kubrick, inspired by Clarke’s novel Childhood’s End and his lesser-known short story The Sentinel. A striking work of speculative fiction, it depicts, in terms sometimes hopeful and other times cautionary, a future of alien contact, interplanetary travel, conscious machines and even the next great evolutionary leap of humankind.


The most obvious way in which 2018 has fallen short of the vision of 2001 is in space travel. People are not yet routinely visiting space stations, making unremarkable trips to one of several moon bases, or travelling to other planets. But Kubrick and Clarke hit the bull’s-eye when imagining the possibilities, problems and challenges of the future of artificial intelligence.

What can computers do?

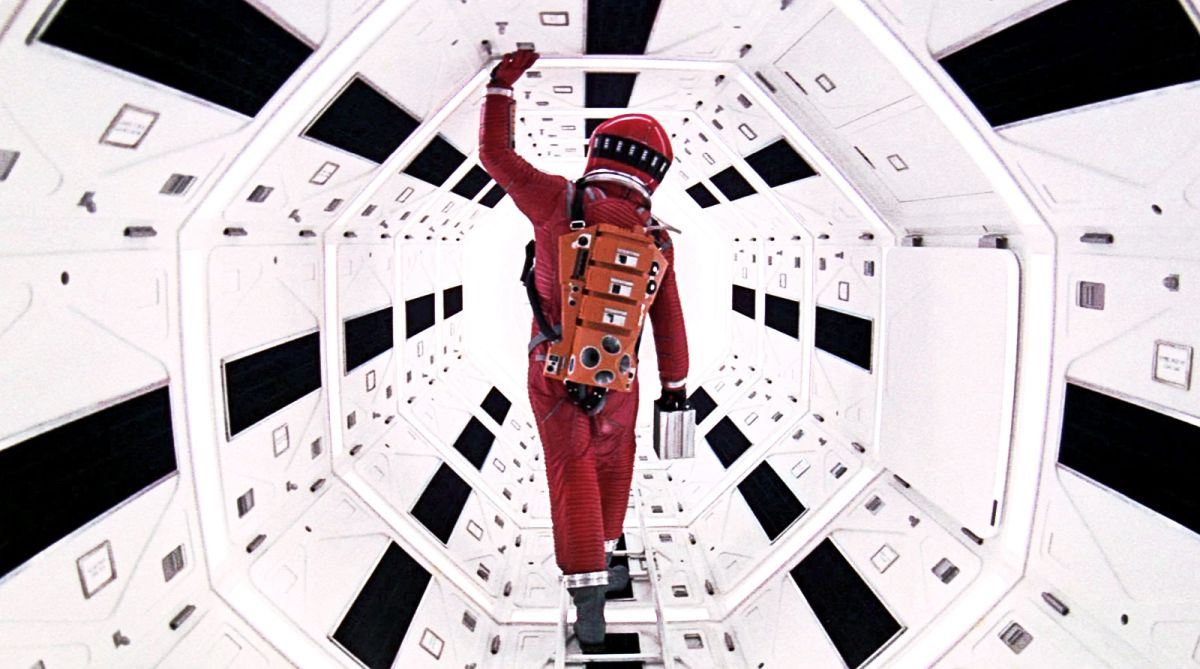

The movie’s central drama can in many ways be viewed as a battle to the death between human and computer. The artificial intelligence of 2001 is embodied in HAL, the omniscient computational presence that serves as the brain of the Discovery One spaceship, and perhaps the film’s most famous character. HAL marks the pinnacle of computational achievement: a self-aware, seemingly infallible device and a ubiquitous presence in the ship, always listening, always watching.

HAL is not just a technological assistant to the crew but rather, in the words of the mission commander Dave Bowman, the sixth crew member. The humans interact with HAL by speaking to him, and he replies in a measured male voice, somewhere between stern-yet-indulgent parent and well-meaning nurse. HAL is like Alexa and Siri, only much better. HAL has complete control of the ship and also, as it turns out, is the only crew member who knows the true goal of the mission.

Ethics in the machine

The tension of the film’s third act revolves around Bowman and his crewmate Frank Poole becoming increasingly suspicious that HAL is malfunctioning, and around HAL’s discovery of those suspicions. Dave and Frank want to pull the plug on a failing computer, while the self-aware HAL wants to live. All of them want to complete the mission.

The life-or-death chess match between the humans and HAL anticipates some of today’s questions about the prevalence and deployment of artificial intelligence in people’s daily lives.

First and foremost is the question of how much control people should cede to artificially intelligent machines, regardless of how “smart” the systems might be. HAL’s control of Discovery is like a deep-space version of the networked home of the future or the driverless car. Citizens, policymakers, experts and researchers are all still exploring the degree to which automation could, or should, take humans out of the loop. Some of the considerations involve relatively simple questions about the reliability of machines, but other issues are more subtle.

The actions of a computational machine are dictated by decisions that humans encode in the algorithms controlling it. An algorithm generally has some quantifiable goal toward which each of its actions should make progress, like winning a game of checkers, chess or Go. Just as an AI system analyses the positions of pieces on a game board, it can also measure the efficiency of a warehouse or the energy use of a data centre.

But what happens when a moral or ethical dilemma arises en route to the goal? For the self-aware HAL, completing the mission, and staying alive, wins out when weighed against the lives of the crew. What about a driverless car? Is its mission to get a passenger from one place to another as quickly as possible, or to avoid killing pedestrians? When someone steps in front of an autonomous vehicle, those goals conflict. That might feel like an obvious “choice” to program away, but what if the car needs to “choose” between two different scenarios, each of which would cause a human death?

Under surveillance

In one classic scene, Dave and Frank climb into one of the ship’s pods, where they think HAL can’t hear them, to discuss their doubts about HAL’s functioning and his ability to control the ship and guide the mission. They broach the idea of shutting him down. Little do they know that HAL’s cameras can see them: the computer reads their lips through the pod window and learns of their plans.

In the modern world, a version of that scene plays out all day, every day. Most of us are effectively monitored continuously, through our almost-always-on phones and through corporate and government surveillance of our real-world and online activities. The boundary between private and public has become increasingly fuzzy, and it continues to blur.

The characters’ relationships in the movie made me think a lot about how people and machines might coexist, or even evolve together. Through much of the movie, even the humans talk to each other blandly, without much tone or emotion, as they might talk to a machine or as a machine might talk to them. HAL’s famous death scene, in which Dave methodically disconnects HAL’s logic links, made me wonder whether intelligent machines will ever be afforded something equivalent to human rights. Clarke believed it quite possible that humans’ time on Earth was but a “brief resting place” and that the maturation and evolution of the species would necessarily take people well beyond this planet.

2001 ends optimistically, vaulting a human through the “Stargate” to mark the rebirth of the race. Doing this in reality will require us to figure out how to make the best use of the machines and devices we are building, and to make sure we don’t let those machines control us.

The writer is professor, department of mathematics, computational science, and computer science, Dartmouth College, US. This article was first published on www.theconversation.com