The animal brain is being simulated in the physics lab to analyse large amounts of data.

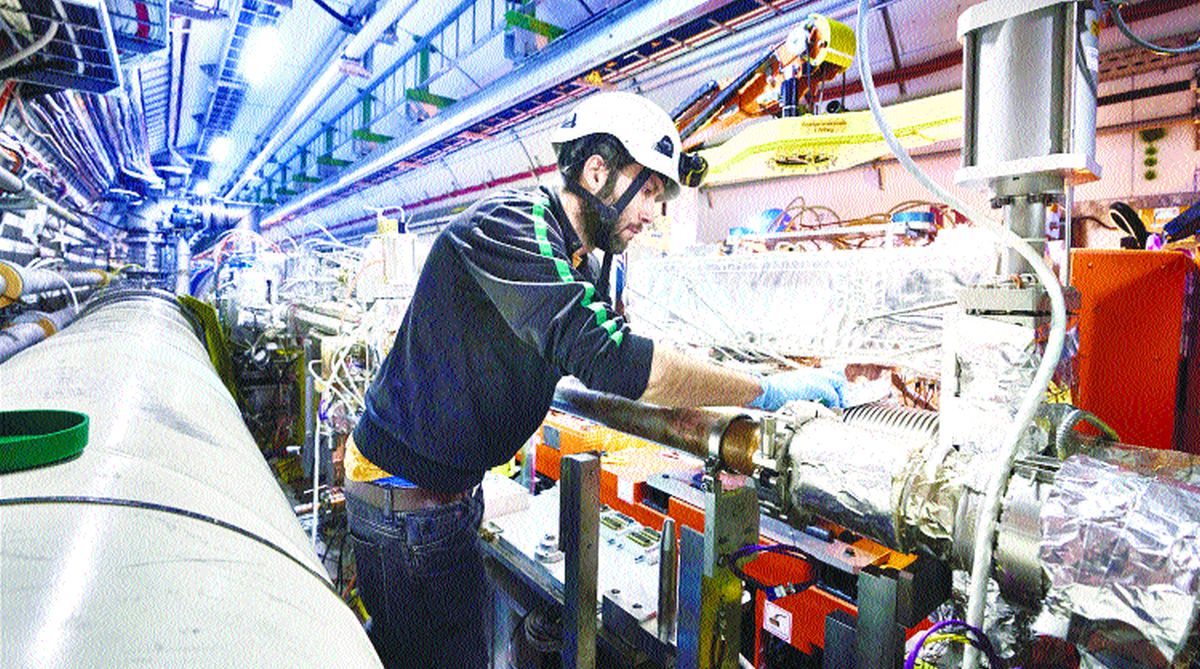

The LHC at Cern near Geneva.

Research at the frontiers of physics works with very high energy reactions, which generate data in quantities that have not been encountered before.

Alexander Radovic, Mike Williams, David Rousseau, Michael Kagan, Daniele Bonacorsi, Alexander Himmel, Adam Aurisano, Kazuhiro Terao and Taritree Wongjirad, from universities in France, Italy and the US, review in the journal Nature the challenges and opportunities in using machine learning to deal with this avalanche of information.

Physics, for all its success, remains an incomplete study. The standard model of particle physics, which describes the known fundamental particles, is unprecedented in the accuracy of its predictions at the very small scale. But this model does not deal with the force of gravity.

Gravity has a negligible effect at the very fine scale, because masses are so small and electrical or nuclear forces are so much stronger. The theory is hence not suited for large masses and distances, where the force of gravity dominates.

Even at this small scale, there are loose ends that need to be tied up. The properties of the neutrino, a very light, uncharged particle that is very difficult to detect, and of the Higgs boson, the particle implicated in the inertia of matter and difficult to create, have not yet been fully observed and documented.

To complete the understanding of even the standard model, we need to see how matter behaves at distances that are even smaller than the closest we have seen so far. As particles of matter repel one another at extremely short distances, we need to create very high energies to bring them close enough.

This is the motivation for the high-energy accelerators that have been constructed over the decades. The greatest of them all is the Large Hadron Collider at the European Organization for Nuclear Research (more commonly known by its acronym, Cern) near Geneva.

The LHC, over its course of 27 km, accelerates protons to nearly the speed of light, so that their collisions have the energy required to create new, massive particles, like the Higgs. While the creation of a Higgs is itself a rare event, there are billions of other products of the collisions, which need to be detected and recorded.

The authors of the Nature article write that the arrays of sensors in the LHC contain some 200 million detection elements, and that the data they produce, even after drastic reduction and compression, is as much every hour as Google handles in a year!

It is not possible to process such quantities of data in the ordinary way. An early large-scale data handling challenge arose in mapping the 3.3 billion base pairs of the human genome.

One of the methods used was to press huge numbers of computers worldwide into action. That was through a programme in which any computer connected to the Internet could participate and contribute its idle time (between keystrokes, for instance) to the effort.

Such methods, however, would not be effective with the data generated by the LHC. Even before the data is processed, the Nature paper says, the incoming data is filtered so that only one item out of 100,000 is retained. While machine-learning methods are used to handle the data that is finally kept, even the hardware that does the initial filtering uses machine-learning routines, the paper says.

Machine learning consists of computer and statistical techniques to search for patterns or significant trends within massive data, where conventional methods of data analysis are not feasible. In simple applications, a set of known data is analysed to find a mathematical formula that fits their distribution. The formula is then tested on some known examples and refined, so that it makes correct predictions with unknown data too.
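A minimal sketch of this fit-and-test idea, in Python, could look like the following. The data here is made up for illustration (points scattered around the line y = 2x + 1), the "formula" is assumed to be a straight line, and the names `fit_line` and `mean_abs_error` are illustrative, not taken from the Nature paper. Four-fifths of the points are used to find the formula and the rest are held back to check that it also predicts data it has not seen.

```python
import random

def fit_line(xs, ys):
    """Least-squares fit of the formula y = a*x + b to known data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

def mean_abs_error(a, b, xs, ys):
    """Average size of the prediction error on a set of points."""
    return sum(abs(a * x + b - y) for x, y in zip(xs, ys)) / len(xs)

# Made-up data (an assumption): y = 2x + 1 with a little random noise.
rng = random.Random(0)
xs = [i / 10 for i in range(100)]
ys = [2 * x + 1 + rng.gauss(0, 0.1) for x in xs]

# Hold back every fifth point for testing; fit on the remainder.
points = list(zip(xs, ys))
train = [p for i, p in enumerate(points) if i % 5 != 0]
test = [p for i, p in enumerate(points) if i % 5 == 0]

a, b = fit_line([x for x, _ in train], [y for _, y in train])
err = mean_abs_error(a, b, [x for x, _ in test], [y for _, y in test])

print(f"fitted formula: y = {a:.2f}x + {b:.2f}")
print(f"mean error on held-back points: {err:.3f}")
```

If the error on the held-back points is about as small as the noise in the data, the formula can reasonably be trusted on new data of the same kind.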

The technique can then be used to devise marketing strategies, weather forecasts and automated clinical diagnoses. While powerful computers were able to process huge volumes of data and perform well, without taking too long over it, it was realised that there were situations where the animal brain could do better.

The animal brain does not use the conventional computer's linear method of fully analysing the data. Instead, it “trains itself” to pick out significant data elements and to adjust its responses on the basis of how good its predictions are.

Computer programmes were hence written to simulate the animal brain, in the form of “neural networks”, or programmes whose units behave like nerve cells. In the simple instance of recognising just one feature, the input could be presented to a single virtual neuron.

The neuron responds at random from a set of choices. If the answer is correct, there is feedback that adds to the probability of that response, and if the answer is wrong, the feedback lowers the probability.
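The feedback loop just described can be sketched in a few lines of Python. This is a toy and not the method used at the LHC: the labels, the step size, the floor of 0.05 and the function name `train_neuron` are all assumptions made for illustration.

```python
import random

def train_neuron(choices, correct, rounds=200, step=0.5, seed=0):
    """Toy neuron: answers at random, and feedback shifts the odds."""
    rng = random.Random(seed)
    # Start with an equal preference for every possible response.
    weights = {c: 1.0 for c in choices}
    for _ in range(rounds):
        # Respond at random, in proportion to the current preferences.
        answer = rng.choices(choices, weights=[weights[c] for c in choices])[0]
        if answer == correct:
            # Positive feedback: this response becomes more likely.
            weights[answer] += step
        else:
            # Negative feedback: less likely, but never quite impossible.
            weights[answer] = max(0.05, weights[answer] - step)
    total = sum(weights.values())
    return {c: weights[c] / total for c in choices}

probs = train_neuron(["car", "pedestrian", "tree"], correct="car")
for label, p in probs.items():
    print(f"{label}: {p:.3f}")
```

After a couple of hundred rounds of feedback, nearly all of the probability sits on the correct response, although the neuron started with no preference at all.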

We can see that this device would soon learn, through a random process, to consistently make the correct response. A set of artificial neurons that send their responses to another set of neurons, and so on, could deal with several inputs of greater complexity. A network like this could learn to identify an image as being that of a car or a pedestrian, for instance, and if a pedestrian, whether it is a man or woman!
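As a small illustration of what a second layer adds, here is a Python sketch of two neurons feeding a third. The weights and thresholds are picked by hand for this example (an assumption, since a real network would learn them through feedback), and the names `neuron` and `two_layer_network` are illustrative. The network fires when exactly one of its two inputs is on, a rule that no single neuron of this simple kind can capture by itself.

```python
def neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def two_layer_network(x1, x2):
    # First layer: two neurons look at the raw inputs.
    either = neuron([x1, x2], [1, 1], threshold=1)  # at least one input is on
    both = neuron([x1, x2], [1, 1], threshold=2)    # both inputs are on
    # Second layer: one neuron combines the first layer's responses.
    # It fires for "either, but not both".
    return neuron([either, both], [1, -1], threshold=1)

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", two_layer_network(x1, x2))
```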

This architecture is now adapted in the LHC to decide which data to keep for further analysis and which to discard. The paper says that suitable algorithms, and neural networks, have been developed for satisfying “the stringent robustness requirements of a system that makes irreversible decisions (like discard of data).”

The paper says that the machine learning methods have enabled rapid processing of data that would have taken many years otherwise. Apart from the need for speed, the algorithms used need to be adapted to the specific signatures that are being looked for, such as the decay products that could signal the very rare Higgs boson event.

The paper says that the data handling demands will only increase, as the data from the LHC will grow by an order of magnitude within a decade, “resulting in much higher data rates and even more complex events to disentangle.” The machine learning community is hence at work to discover new and more powerful techniques, the paper says.

Discoveries in physics have traditionally been data based. A turning point in the history of science could be when Johannes Kepler firmly established how Earth and the planets go around the Sun, confirming the picture proposed by Copernicus, in which the Sun and planets do not go around the Earth.

Kepler came to this epochal conclusion entirely on the basis of massive astronomical data collected by his predecessor, Tycho Brahe. The data, collected with rudimentary instrumentation, was voluminous and the analysis was painstaking.

In contrast, we are now using massively sophisticated computing power and much more expensive data acquiring methods. While the puzzle solved by Tycho Brahe and Kepler was basic and changed the course of science, the problem now being looked at is of a complexity and character that could not have been imagined even a century ago.

The writer can be contacted at response@simplescience.in
